by Thorsten Matthias, Markus Wimplinger, Paul Lindner, Bioh Kim, Eric Pabo, Dustin Warren, EV Group

Executive overview

The advantages as well as the technical feasibility of through-silicon vias (TSV) have been acknowledged by the industry. Today, the major focus is on manufacturability and on the integration of all the different building blocks for TSVs and 3D interconnects. This paper presents advances in lithography, thin wafer processing, and wafer bonding, with an emphasis on the integration of these process steps.

Copyright © 2009 by International Microelectronics And Packaging Society (IMAPS). Permission to reprint/republish granted from the 42nd International Symposium on Microelectronics (IMAPS 2009) Proceedings, pg. 563-568, November 1-5, 2009, San Jose McEnery Convention Center, San Jose, California. ISBN 0-930815-89-0.

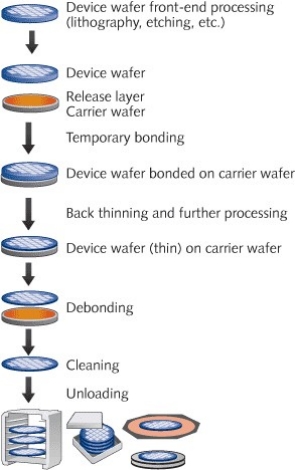

March 1, 2010 – Face-to-back integration schemes require the processing of thin wafers for both wafer-to-wafer and chip-to-wafer stacking. Prior to thinning, the device wafer is mounted on a carrier wafer with a temporary wafer bonding step. 300mm wafers with a thickness of 30μm have been successfully processed through the complete TSV process line.

Lithography on the backside of the thin device wafer requires alignment of the photo-mask to the alignment keys buried in the bond interface. After backside processing, the thin wafer is debonded from the carrier wafer. The thin wafer is either mounted on dicing tape for singulation and subsequent chip-to-wafer stacking, or it is bonded immediately to another device wafer for wafer-to-wafer stacking.

|

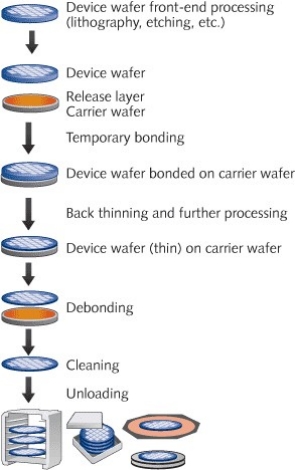

| Figure 1. Process flow of thin wafer processing by temporary bonding and debonding. |

For applications with very high TSV density, face-to-face integration schemes using Cu-Cu thermo-compression wafer bonding are a promising approach, because the electrical contacts are established at the same time as the mechanical bond. Alternatively, fusion bonding is very attractive because of its cost-of-ownership advantages over metal-metal bonding. Recent equipment and process improvements enable sub-micron alignment accuracy on 300mm wafers.

3D integration and TSVs

Extensive research and development activities over many years have shown the feasibility as well as the technical advantages of through-silicon vias (TSV) and 3D integration. Many different manufacturing and integration schemes are being discussed and evaluated. Most, if not all, of the individual process steps and building blocks have been successfully qualified. Today, industrial consortia such as EMC-3D focus on cost-competitive manufacturability and on the integration of all the different building blocks for TSVs and 3D interconnects.

Vertical or 3D stacking of chips can be realized as chip-to-chip (C2C), chip-to-wafer (C2W), or wafer-to-wafer (W2W) manufacturing. The chips themselves can be stacked face-to-face or face-to-back [1]. Face-to-back integration requires wafer thinning and processing of the device wafer on both the frontside and the backside before the dies or wafers are permanently bonded.

Thin wafer processing

The ongoing demand for ever smaller devices requires minimizing the diameter of the TSVs. Although TSVs can be manufactured with quite extreme aspect ratios, manufacturing costs are significantly lower for moderate aspect ratios of 1:5 to 1:10. Because a via must pass through the entire thinned wafer, the achievable via depth, and with it the wafer thickness, scales with the via diameter. Small via diameters therefore require thin device wafers.
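The link between via diameter and wafer thickness comes down to simple arithmetic. A through-silicon via has to span the thinned wafer, so at a fixed aspect ratio the maximum via depth, and therefore the maximum wafer thickness, is the diameter multiplied by the aspect ratio. The Python sketch below makes this explicit. It assumes the via depth equals the wafer thickness and ignores dielectric and metal layers. The function name and the via diameters in the sweep are illustrative and are not taken from a specific process.

```python
def max_wafer_thickness_um(via_diameter_um: float, aspect_ratio: float) -> float:
    """Deepest via, and hence thickest wafer, reachable at a given aspect ratio.

    aspect_ratio is depth divided by diameter, e.g. 10.0 for a 1:10 via.
    Assumes the via depth equals the thinned wafer thickness (hypothetical model).
    """
    if via_diameter_um <= 0 or aspect_ratio <= 0:
        raise ValueError("via diameter and aspect ratio must be positive")
    return via_diameter_um * aspect_ratio


if __name__ == "__main__":
    # Sweep the cost-effective aspect-ratio window from the text (1:5 to 1:10)
    # for a few illustrative via diameters.
    for d in (3.0, 5.0, 10.0):
        t_low = max_wafer_thickness_um(d, 5.0)
        t_high = max_wafer_thickness_um(d, 10.0)
        print(f"{d:4.1f} um via: wafer thickness {t_low:5.1f}-{t_high:5.1f} um")
```

Under these assumptions, a 3μm via at 1:10 corresponds to the 30μm wafer thickness mentioned above for 300mm wafers.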

Figure 1 shows the generic process flow for thin wafer processing with temporary bonding to a carrier wafer. The starting point is a device wafer whose front-end processing on the frontside is complete. This device wafer is bonded to a carrier wafer with its frontside in the bond interface. After bonding, the first step is back-thinning of the wafer. Back-thinning is usually a multistep process consisting of mechanical back-grinding followed by stress relief etching and polishing. After back-thinning, the backside of the device wafer can be processed using standard wafer fab equipment. The carrier wafer provides mechanical support and stability and protects the fragile edge of the thin wafer. Finally, when all backside processing is done, the wafer is debonded, cleaned, and transferred to a film frame or another output format. Temporary bonding and debonding are enabling technologies for wafer-level processing of thin wafers. The main advantage of temporary bonding and debonding using a carrier wafer is compatibility with existing equipment, processes, and process flows, for example:

Standard fab equipment. The bonded wafer stacks literally mimic a standard wafer. The geometry of the bonded stack can be tailored so that it complies with SEMI wafer standards. As a result, standard wafer processing equipment can be used without any modification. There is no need for special end-effectors, wafer chucks, cassettes, or pre-aligners. No downtime at all is required to switch between processing standard thick wafers and temporarily bonded thin wafers.

Existing process lines. With the addition of only two pieces of equipment, the temporary bonder and the debonder, a complete process line or even fab becomes able to process thin wafers.

Existing processes. The mechanical and thermal properties of the bonded wafer stack are very similar to a standard thick wafer. This enables the use of existing wafer processing recipes, which have been proven and qualified for standard wafers.

Future process flows. The user has the full flexibility to change the processing sequence and the individual process steps for backside processing. After temporary bonding, the device wafer is securely protected against mechanical damage. Furthermore, adding process steps or modifying the process flow does not impact the cost of ownership for thin-wafer processing.

Product roadmaps. For many devices and products, the roadmaps lead to even thinner wafers in the future. With temporary bonding, the entire backside processing becomes independent of the wafer thickness. Reducing the wafer thickness does not require any modifications or adjustments to the processing equipment.
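The generic flow of Figure 1 has a fixed frame: temporary bonding, thinning, backside processing, debonding, cleaning, and transfer. Within that frame, the backside steps can be added, repeated, or reordered, as the "Future process flows" point describes. One way to express this is an ordering check. The Python sketch below is a minimal example under that interpretation. The step names, the phase ranks, and the choice of which steps are required are hypothetical labels, not an equipment or MES interface.

```python
from enum import Enum


class Step(Enum):
    TEMP_BOND = "temporary bonding to carrier"
    GRIND = "mechanical back-grinding"
    STRESS_RELIEF = "stress relief etching / polishing"
    BACKSIDE_LITHO = "backside lithography"
    BACKSIDE_ETCH = "backside etching"
    BACKSIDE_DEPOSITION = "backside deposition"
    DEBOND = "debonding"
    CLEAN = "cleaning"
    TRANSFER = "transfer to film frame or other output format"


# Hypothetical phase ranks: a valid flow never steps back to a lower rank.
# All backside steps share one rank, so they may be added, repeated or
# reordered freely between thinning and debonding.
PHASE = {
    Step.TEMP_BOND: 0,
    Step.GRIND: 1,
    Step.STRESS_RELIEF: 2,
    Step.BACKSIDE_LITHO: 3,
    Step.BACKSIDE_ETCH: 3,
    Step.BACKSIDE_DEPOSITION: 3,
    Step.DEBOND: 4,
    Step.CLEAN: 5,
    Step.TRANSFER: 6,
}

# Steps every flow must contain (stress relief is treated as optional here).
REQUIRED = {Step.TEMP_BOND, Step.GRIND, Step.DEBOND, Step.CLEAN, Step.TRANSFER}


def is_valid_flow(flow: list[Step]) -> bool:
    """Check a proposed flow against the generic sequence of Figure 1."""
    if not REQUIRED.issubset(flow):
        return False
    # The device wafer is bonded exactly once, before anything else happens.
    if flow[0] is not Step.TEMP_BOND or flow.count(Step.TEMP_BOND) != 1:
        return False
    ranks = [PHASE[step] for step in flow]
    # Non-decreasing ranks: no step may fall back to an earlier phase.
    return all(a <= b for a, b in zip(ranks, ranks[1:]))
```

Modeling all backside steps with a shared rank keeps the check independent of how many backside steps a flow contains. This mirrors the point that changing the backside sequence does not affect the bonding and debonding frame.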

An important point is the choice of the carrier wafer. For silicon-based devices, the recommended carrier is a standard silicon wafer. First, with a silicon carrier the resulting bonded stack closely mimics a standard wafer. Geometrically, this enables the use of standard wafer processing equipment without modifications, whereas oversized carriers would require special wafer chucks and cassettes for the wafer stack.
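One way to tailor the stack geometry is to choose the carrier thickness so that carrier, bonding layer, and thinned device wafer together match a standard wafer. The Python sketch below does that bookkeeping. The 30μm device thickness comes from the text. The 775μm nominal 300mm wafer thickness, the ±25μm tolerance, and the 20μm adhesive layer are assumptions for illustration, and the function names are hypothetical.

```python
# Assumed nominal thickness of a standard 300mm wafer, in micrometres.
SEMI_300MM_THICKNESS_UM = 775.0


def carrier_thickness_um(device_um: float, adhesive_um: float,
                         target_um: float = SEMI_300MM_THICKNESS_UM) -> float:
    """Carrier thickness that makes carrier + adhesive + thinned device hit the target."""
    carrier = target_um - device_um - adhesive_um
    if carrier <= 0:
        raise ValueError("device and adhesive layers already exceed the target thickness")
    return carrier


def stack_in_spec(carrier_um: float, device_um: float, adhesive_um: float,
                  target_um: float = SEMI_300MM_THICKNESS_UM,
                  tolerance_um: float = 25.0) -> bool:
    """True if the bonded stack lies within target +/- tolerance (tolerance assumed)."""
    stack = carrier_um + device_um + adhesive_um
    return abs(stack - target_um) <= tolerance_um


if __name__ == "__main__":
    carrier = carrier_thickness_um(device_um=30.0, adhesive_um=20.0)
    print(f"carrier: {carrier} um, in spec: {stack_in_spec(carrier, 30.0, 20.0)}")
```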

Even more important are the thermal properties of the bonded stack. With a silicon carrier, the thermal expansion of the device wafer and the carrier is perfectly matched. A non-silicon carrier would cause the stack to bow and warp due to thermal expansion mismatch, and the induced stress risks affecting the processing characteristics and, ultimately, device performance. CTE-matched glass carriers create a different problem: metal ion contamination. These glass carriers cannot be used in CMOS fabs, which undermines one of the major advantages of the carrier wafer approach: the ability to process the frontside and backside of the device wafer on the same equipment set.
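The scale of the thermal mismatch can be estimated by comparing the free radial expansion of the two wafers at the wafer edge: the CTE difference times the temperature excursion times the radius. The Python sketch below performs that first-order estimate. The CTE values are approximate room-temperature figures, assumed for illustration, with soda-lime glass standing in for an unmatched carrier. The 200 K excursion is hypothetical. The estimate ignores the bonding layer and does not compute bow. In a real bonded stack the wafers cannot slide, so this mismatch turns into stress and warp.

```python
# Approximate room-temperature CTE values in ppm/K (assumed for illustration).
CTE_SILICON_PPM = 2.6
CTE_SODA_LIME_GLASS_PPM = 9.0


def edge_mismatch_um(cte_device_ppm: float, cte_carrier_ppm: float,
                     delta_t_k: float, radius_mm: float = 150.0) -> float:
    """Difference in free radial expansion at the wafer edge, in micrometres.

    This is what the two wafers would do if they were not bonded; in a bonded
    stack the mismatch is taken up as stress and bow instead.
    """
    mismatch_strain = (cte_carrier_ppm - cte_device_ppm) * 1e-6 * delta_t_k
    return mismatch_strain * radius_mm * 1e3  # mm -> um


if __name__ == "__main__":
    for name, cte in (("silicon", CTE_SILICON_PPM), ("glass", CTE_SODA_LIME_GLASS_PPM)):
        print(f"{name} carrier: {edge_mismatch_um(CTE_SILICON_PPM, cte, 200.0):.1f} um")
```

With these assumed values, an unconstrained glass carrier would expand about 192μm more than the silicon device wafer at the edge of a 300mm wafer. With a silicon carrier, the mismatch is zero.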

Lithography for temporarily bonded wafers

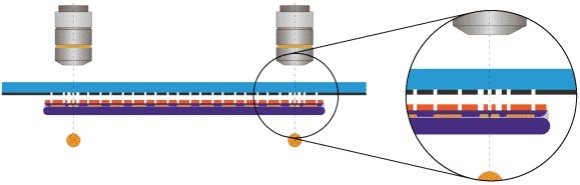

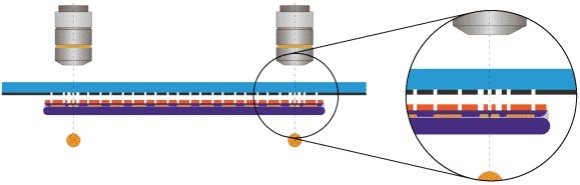

For many integration schemes, the backside of the thin device wafer has to be patterned with one or more mask levels after thinning and polishing. Because a bonded stack is so similar to a single wafer, standard spin coating and developing processes can be applied. Features such as bond pads, pillars, and bumps are typically patterned on a mask aligner. Exposing the resist-coated surface requires aligning the mask to the features on the device wafer front side, which are buried in the bond interface. Modern mask aligners have integrated IR alignment capability for this application (Figure 2).

Figure 2. Front-to-backside lithography for a thin device wafer bonded to a carrier wafer. The alignment keys on the device wafer front side are buried in the bond interface. The alignment to the mask is performed with infrared (IR) alignment.

Permanent wafer bonding

There are three main wafer bonding methods for 3D interconnects: fusion (or molecular) bonding, adhesive thermo-compression bonding, and metal-metal thermo-compression bonding. In addition, there are hybrid methods such as simultaneous adhesive-metal bonding or simultaneous fusion-metal bonding. Each of these methods has advantages and disadvantages. For example, adhesive wafer bonding is insensitive to particles, while metal-metal thermo-compression bonding simplifies the process flow because the mechanical and electrical connections are established simultaneously in one process step [1].

Fusion wafer bonding is a two-step process consisting of room temperature pre-bonding and annealing at elevated temperature. The classical annealing schemes, developed for SOI wafer manufacturing, require annealing temperatures of 800-1100°C. A surface pre-processing step, LowTemp plasma activation, modifies the wafer surface so that the annealing temperature can be reduced to 200-400°C. Fully processed device wafers cannot withstand the classical annealing temperatures, so this type of plasma activation is what makes fusion wafer bonding usable for 3D integration.

Fusion wafer bonding brings several advantages:

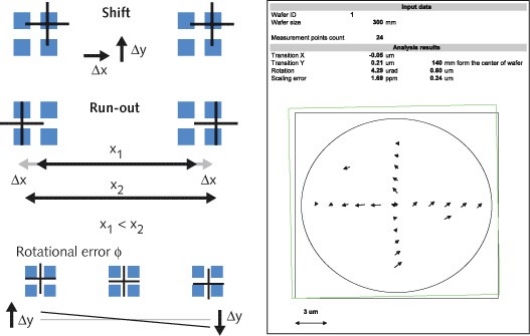

Alignment accuracy. Because the wafers are bonded at room temperature, misalignment caused by thermal expansion of the wafers is eliminated completely. Figure 3 shows alignment results with the EVG SmartView NT Aligner.

Figure 3. Alignment results with the EVG SmartView NT Aligner: 400 alignments.

Due to the very good alignment accuracy, fusion wafer bonding is especially well suited for high density TSV devices. The ITRS roadmap for high density TSVs specifies via diameters of 0.8-1.5μm in 2012 [2]. Sub-micron post bond alignment accuracy is necessary for these devices.
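To see why thermal effects matter at this scale, consider two identical silicon wafers aligned while their temperatures differ slightly. The resulting run-out at the wafer edge is the silicon CTE times the temperature difference times the radius. The Python sketch below computes this value and inverts it to find the largest temperature difference that fits a given alignment budget. The CTE of about 2.6 ppm/K is an approximate room-temperature value, assumed here. The function names and the 0.5μm budget are hypothetical.

```python
CTE_SILICON_PER_K = 2.6e-6  # approximate room-temperature value (assumption)


def run_out_um(delta_t_k: float, radius_mm: float = 150.0,
               cte_per_k: float = CTE_SILICON_PER_K) -> float:
    """Edge run-out when two identical silicon wafers are aligned
    at temperatures differing by delta_t_k."""
    return cte_per_k * delta_t_k * radius_mm * 1e3  # mm -> um


def max_temperature_difference_k(budget_um: float, radius_mm: float = 150.0,
                                 cte_per_k: float = CTE_SILICON_PER_K) -> float:
    """Largest wafer-to-wafer temperature difference keeping edge run-out within budget_um."""
    return budget_um / (cte_per_k * radius_mm * 1e3)


if __name__ == "__main__":
    print(f"run-out for 1 K on 300mm: {run_out_um(1.0):.2f} um")
    print(f"max delta T for 0.5 um budget: {max_temperature_difference_k(0.5):.2f} K")
```

Under these assumptions, a 1 K difference already produces roughly 0.39μm of run-out at the edge of a 300mm wafer. That is a large fraction of the 0.8-1.5μm via diameters cited above, which illustrates the value of bonding at a uniform room temperature.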

Throughput. Fusion wafer bonding has a higher throughput than adhesive or metal-metal thermo-compression bonding because it is a room temperature process. It can be implemented either as an in situ bond process in the aligner module or as an ex situ process under vacuum in a bond module. The subsequent annealing can be performed as a batch process in a furnace or oven.

Inspection capability after pre-bonding, prior to final annealing. After the room temperature pre-bonding step, the bond strength is high enough to allow inspection of bond quality and alignment accuracy. In the case of misalignment or bond quality problems, e.g., voids, the wafer pair can be separated and reworked. This concept of inspection and, if necessary, rework prior to final annealing has been used in SOI wafer manufacturing for many years.

Cost-of-ownership. The combined effects of in situ bonding in the aligner module, highest throughput, increased yield due to the ability to rework, and reduced capital costs result in low cost-of-ownership for manufacturing schemes based on fusion wafer bonding.

The primary challenges for fusion wafer bonding are the sensitivity to particles and the specifications for surface roughness. Any particle within the bond interface will create an un-bonded area, a void. The size of the void can be up to 1000 times larger than the particle itself. A void in the bond interface will not only damage the directly impacted dies, but it may even prevent back thinning and thereby cause loss of the entire wafer. To overcome this threat, modern wafer bonding systems include wafer cleaning modules within the bonding platform. This equipment configuration enables cleaning of the wafer surface immediately prior to wafer bonding. Because of the integrated cleaning modules and the ability to rework the bonded wafers, the sensitivity to particles is no longer perceived as an issue for high-volume manufacturing.
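The impact of a single particle can be estimated by scaling it to a worst-case void and counting the dies that the void overlaps. The Python sketch below uses the "up to 1000 times" factor from the text as an upper bound. It assumes a rectangular die grid anchored at the origin, ignores the wafer edge, and does not model the whole-wafer loss that occurs when a void prevents back-thinning. The function names, the 5μm particle, and the 10mm dies are hypothetical.

```python
import math


def void_diameter_mm(particle_um: float, magnification: float = 1000.0) -> float:
    """Worst-case void diameter: up to `magnification` times the particle size."""
    return particle_um * magnification / 1000.0  # um -> mm


def dies_hit(cx_mm: float, cy_mm: float, void_d_mm: float,
             die_w_mm: float, die_h_mm: float) -> set[tuple[int, int]]:
    """(col, row) indices of dies on a grid anchored at the origin whose area
    overlaps a circular void centred at (cx_mm, cy_mm)."""
    r = void_d_mm / 2.0
    hit = set()
    cols = range(math.floor((cx_mm - r) / die_w_mm), math.floor((cx_mm + r) / die_w_mm) + 1)
    rows = range(math.floor((cy_mm - r) / die_h_mm), math.floor((cy_mm + r) / die_h_mm) + 1)
    for col in cols:
        for row in rows:
            x0, y0 = col * die_w_mm, row * die_h_mm
            # Closest point of this die rectangle to the void centre.
            nx = min(max(cx_mm, x0), x0 + die_w_mm)
            ny = min(max(cy_mm, y0), y0 + die_h_mm)
            # Strict inequality: a void that only touches a die edge does not count.
            if (nx - cx_mm) ** 2 + (ny - cy_mm) ** 2 < r * r:
                hit.add((col, row))
    return hit


if __name__ == "__main__":
    d = void_diameter_mm(5.0)
    print(f"void diameter: {d} mm")
    print("dies hit at a die corner:", sorted(dies_hit(10.0, 10.0, d, 10.0, 10.0)))
```

In this example, a single 5μm particle that lands near a die corner can take out four dies, even before any wafer-level consequences are considered.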

Fusion wafer bonding requires surface micro-roughness in the range of 0.5-1nm. These requirements can be met with modern CMP technology.
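Roughness specifications like this one are usually checked against height data from a surface scan. The Python sketch below computes RMS roughness (Rq) from a list of heights and compares it with the upper end of the quoted range. The text does not say whether the 0.5-1nm figure is RMS or average roughness, so reading it as RMS is an assumption. The sketch also only removes the mean height, whereas real scan data normally need tilt or plane correction first. The function names are hypothetical.

```python
import math


def rms_roughness_nm(heights_nm: list[float]) -> float:
    """Rq: root-mean-square deviation of surface heights from their mean."""
    n = len(heights_nm)
    if n == 0:
        raise ValueError("need at least one height sample")
    mean = sum(heights_nm) / n
    return math.sqrt(sum((h - mean) ** 2 for h in heights_nm) / n)


def meets_fusion_spec(heights_nm: list[float], limit_nm: float = 1.0) -> bool:
    """Compare Rq with the upper end of the 0.5-1nm range (RMS interpretation assumed)."""
    return rms_roughness_nm(heights_nm) <= limit_nm


if __name__ == "__main__":
    profile = [10.5, 9.5, 10.5, 9.5]  # hypothetical heights in nm
    print(f"Rq = {rms_roughness_nm(profile):.2f} nm, in spec: {meets_fusion_spec(profile)}")
```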

Bond alignment inspection

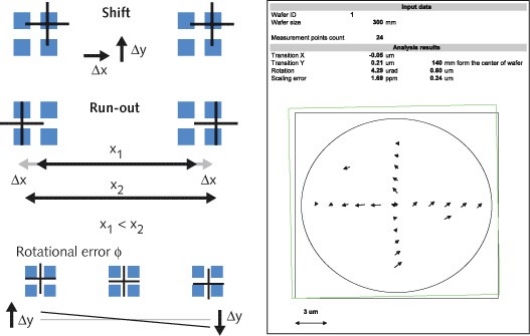

Post-bond alignment inspection is a critical process control step. A misaligned wafer bond can result in total loss of two fully processed wafers. Therefore, it is important to analyze all the contributing factors to the alignment accuracy. Figure 4 shows the different factors contributing to misalignment, as well as the process control output format for a bond alignment inspection system.

Figure 4. Left side: Potential alignment errors: shift, rotational misalignment, and run-out; the post-bond alignment is often an overlay of all three types. Right side: The post-bond alignment inspection system EVG40 NT allows customer-defined wafer mapping.
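Because measured post-bond misalignment is usually a combination of shift, rotation, and run-out, a common analysis separates these contributions with a least-squares fit over several measurement sites. The Python sketch below assumes a small-angle linear model in which run-out is treated as an isotropic scale error. Under that model, each site contributes dx = tx − θ·y + s·x and dy = ty + θ·x + s·y. This decomposition is offered as an illustration and is not presented as the algorithm used by any inspection system. The function name and units are hypothetical. It uses NumPy's `numpy.linalg.lstsq`.

```python
import numpy as np


def fit_misalignment(xy_mm, dxy_um):
    """Least-squares split of measured overlay into shift, rotation and run-out.

    xy_mm:  (N, 2) site positions relative to the wafer centre, in mm.
    dxy_um: (N, 2) measured top-minus-bottom offsets at those sites, in um.
    Returns (tx_um, ty_um, rotation_urad, runout_ppm).
    """
    xy = np.asarray(xy_mm, dtype=float)
    d = np.asarray(dxy_um, dtype=float)
    n = len(xy)
    a = np.zeros((2 * n, 4))  # unknowns: tx, ty, theta, s
    # Even rows, x-equations: dx = tx - theta*y + s*x
    a[0::2, 0] = 1.0
    a[0::2, 2] = -xy[:, 1]
    a[0::2, 3] = xy[:, 0]
    # Odd rows, y-equations: dy = ty + theta*x + s*y
    a[1::2, 1] = 1.0
    a[1::2, 2] = xy[:, 0]
    a[1::2, 3] = xy[:, 1]
    b = d.reshape(-1)  # interleaved dx0, dy0, dx1, dy1, ...
    (tx, ty, theta, scale), *_ = np.linalg.lstsq(a, b, rcond=None)
    # theta and scale come out in um per mm (1e-3 rad, 1e-3 strain).
    return tx, ty, theta * 1e3, scale * 1e3
```

Keeping the model linear means a single least-squares solve recovers all three error types, and the residuals then show what the model does not explain. More sites spread toward the wafer edge make the rotation and run-out terms better determined.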

Conclusion

In this paper, significant improvements in manufacturing processes and equipment have been presented. Thin wafer processing is becoming a mainstream process. Temporary bonding to a carrier wafer, thinning, backside processing, and subsequent debonding have been qualified for several different process flows. Using a silicon carrier wafer enables the use of standard fab equipment for thin wafer backside processing. The SmartView NT aligner allows alignment accuracy in the deep sub-micron range. Fusion wafer bonding has some unique advantages for 3D integration, namely alignment accuracy and throughput. Process control, including post-bond alignment inspection, is critical for improved yield and cost-of-ownership.

Acknowledgments

LowTemp and SmartView are registered trademarks of EV Group.

References

[1] P. Garrou, C. Bower, P. Ramm (Eds.), "Handbook of 3D Integration," Wiley, 2008.

[2] International Technology Roadmap for Semiconductors (ITRS), www.itrs.net

Biography

Thorsten Matthias is director of business development at EV Group, Erich Thallner Strasse 1, 4782 St. Florian/Inn, Austria.